Generative Artificial Intelligence (AI) stands at the forefront of innovation, reshaping the boundaries of creativity and technology. It represents a paradigm shift in AI research, enabling machines to autonomously produce original content across various domains, including artwork, music, literature, and design.

This technology emerged from the intersection of deep learning, neural networks, and computational creativity. It builds upon the concept of generative models, which aim to understand and replicate patterns in data to create new, synthetic outputs. Its roots lie in early research on neural networks and probabilistic models, which gradually evolved into sophisticated algorithms capable of generating complex and diverse content.


The concept of generative AI gained prominence with the rise of deep learning techniques, particularly generative adversarial networks (GANs) and variational autoencoders (VAEs). These architectures enable machines to learn and generate data that closely resembles real-world examples by training on large datasets.
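For reference, these are the standard training objectives from the original GAN and VAE formulations (general background, not something specific to the code in this article). A GAN plays a minimax game between a generator G and a discriminator D, while a VAE maximizes the evidence lower bound (ELBO) on the data log-likelihood, with encoder q_φ, decoder p_θ and prior p(z):

```latex
% GAN minimax objective
\min_G \max_D \; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right]
  + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]

% VAE evidence lower bound (ELBO)
\log p_\theta(x) \;\ge\; \mathbb{E}_{z \sim q_\phi(z \mid x)}\left[\log p_\theta(x \mid z)\right]
  - D_{\mathrm{KL}}\left(q_\phi(z \mid x) \,\|\, p(z)\right)
```

In practice the generator is usually trained with the "non-saturating" variant, minimizing -log D(G(z)) instead of log(1 - D(G(z))); that is what a binary cross-entropy against all-ones labels computes, as in the generator loss later in this article.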

The emergence of generative AI has been fueled by advances in computational power, algorithmic innovations, and the availability of vast amounts of data. With the exponential growth of digital content and the proliferation of online platforms, there’s an increasing demand for automated tools that can assist in content creation, curation, and personalization.

Generative AI has sparked excitement and speculation across various industries, from entertainment and advertising to healthcare and finance. Its potential to democratize creativity, enhance productivity, and drive innovation is considerable, and it promises to transform the way we create, collaborate, and interact with technology.

In the following sections, we’ll explore how it is revolutionizing different fields and shaping the future of artificial intelligence.

Working Methodology of Generative AI:

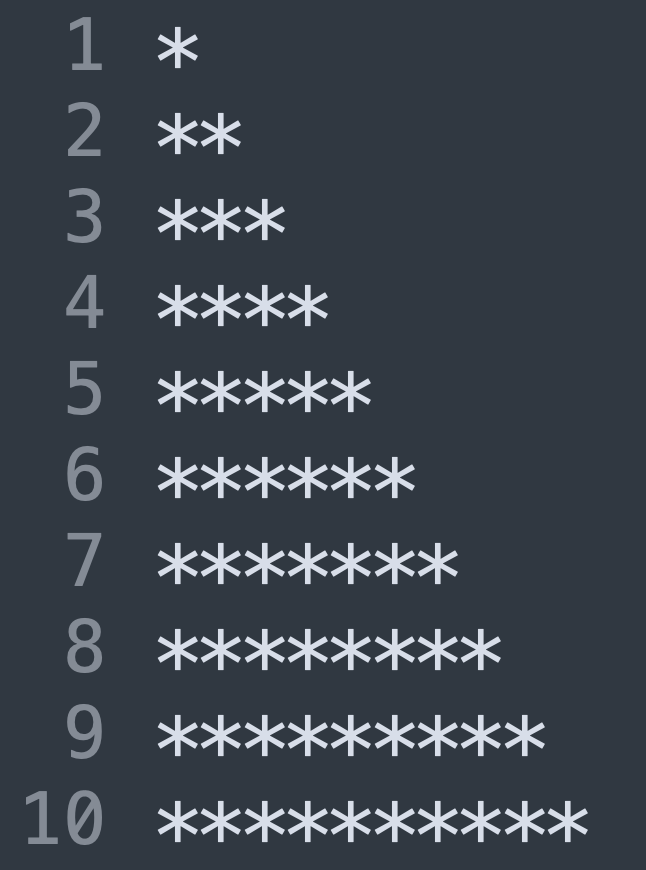

1. Learning from Data:

Generative AI begins by learning from a vast dataset, extracting patterns, styles, and structures inherent in the data. This process involves training a model, typically a neural network, that adjusts its parameters until it captures the correlations and statistical structure of the data.
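A deliberately tiny illustration of this learn-then-sample idea, using NumPy and no neural network at all: the "model" below is just a multivariate Gaussian whose mean and covariance are estimated from the data, and "generation" means drawing fresh points from it. The toy dataset and the helper names are invented for the example; the neural models discussed in this article replace the Gaussian with a far more flexible learned distribution, but the workflow is the same.

```python
import numpy as np

def fit_gaussian(data):
    """'Training': estimate the mean vector and covariance matrix of the data."""
    mean = data.mean(axis=0)
    cov = np.cov(data, rowvar=False)
    return mean, cov

def sample_gaussian(mean, cov, n, rng):
    """'Generation': draw n new points from the learned distribution."""
    return rng.multivariate_normal(mean, cov, size=n)

rng = np.random.default_rng(0)

# Hypothetical toy dataset: 1,000 correlated 2-D points
true_mean = np.array([2.0, -1.0])
true_cov = np.array([[1.0, 0.8], [0.8, 1.0]])
data = rng.multivariate_normal(true_mean, true_cov, size=1000)

mean, cov = fit_gaussian(data)
samples = sample_gaussian(mean, cov, n=5000, rng=rng)

print("learned mean:", mean.round(2))
print("correlation in generated samples:", np.corrcoef(samples, rowvar=False)[0, 1].round(2))
```

The generated points are new (none are copied from `data`), yet they reproduce the pattern the model learned, here the strong positive correlation between the two coordinates.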

Example: Defining a Generative Adversarial Network (GAN)

```python
# Import necessary libraries
import tensorflow as tf
from tensorflow.keras import layers

# Define the generator model
generator = tf.keras.Sequential([
    layers.Dense(256, activation='relu', input_shape=(100,)),
    layers.Dense(784, activation='sigmoid'),
    layers.Reshape((28, 28))
])

# Define the discriminator model
discriminator = tf.keras.Sequential([
    layers.Flatten(input_shape=(28, 28)),
    layers.Dense(256, activation='relu'),
    layers.Dense(1, activation='sigmoid')
])

# Define the GAN model
gan = tf.keras.Sequential([generator, discriminator])
```

Explanation:

- The code defines a GAN architecture consisting of a generator and a discriminator.

- The generator takes random noise as input (a 1D array of shape `(100,)`) and produces images of size 28×28 pixels.

- The discriminator takes images of size 28×28 pixels as input and outputs a single probability indicating whether the input image is real or generated.

- The GAN model combines the generator and discriminator sequentially, where the generator's output is fed into the discriminator.
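The Keras code above only defines the networks; training alternates between updating the discriminator and updating the generator. The sketch below shows that alternation with hand-written gradients in NumPy on a toy 1-D problem. It swaps in a hypothetical affine generator G(z) = a·z + b and a logistic-regression discriminator D(x) = sigmoid(w·x + c) for the dense networks, and the "real" data is simply drawn from N(4, 1). It is meant to expose the update rules, not to train a useful model.

```python
import numpy as np

def sigmoid(s):
    return 1.0 / (1.0 + np.exp(-s))

def d_loss(d_params, x_real, x_fake):
    """Discriminator BCE: real samples labelled 1, generated samples labelled 0."""
    w, c = d_params
    d_real = sigmoid(w * x_real + c)
    d_fake = sigmoid(w * x_fake + c)
    return -np.mean(np.log(d_real)) - np.mean(np.log(1.0 - d_fake))

def d_grads(d_params, x_real, x_fake):
    """Gradient of d_loss with respect to (w, c)."""
    w, c = d_params
    d_real = sigmoid(w * x_real + c)
    d_fake = sigmoid(w * x_fake + c)
    # d/ds[-log sigmoid(s)] = sigmoid(s) - 1 and d/ds[-log(1 - sigmoid(s))] = sigmoid(s)
    dw = np.mean((d_real - 1.0) * x_real) + np.mean(d_fake * x_fake)
    dc = np.mean(d_real - 1.0) + np.mean(d_fake)
    return np.array([dw, dc])

def g_loss(g_params, d_params, z):
    """Non-saturating generator loss: -log D(G(z))."""
    a, b = g_params
    w, c = d_params
    return -np.mean(np.log(sigmoid(w * (a * z + b) + c)))

def g_grads(g_params, d_params, z):
    """Gradient of g_loss with respect to (a, b), holding the discriminator fixed."""
    a, b = g_params
    w, c = d_params
    d_fake = sigmoid(w * (a * z + b) + c)
    common = (d_fake - 1.0) * w  # chain rule: d loss / d G(z)
    return np.array([np.mean(common * z), np.mean(common)])

def train_step(g_params, d_params, x_real, rng, lr=0.05):
    """One alternating update: discriminator first, then generator."""
    z = rng.standard_normal(x_real.shape[0])
    x_fake = g_params[0] * z + g_params[1]
    d_params = d_params - lr * d_grads(d_params, x_real, x_fake)
    g_params = g_params - lr * g_grads(g_params, d_params, z)
    return g_params, d_params

rng = np.random.default_rng(0)
x_real = rng.normal(4.0, 1.0, size=256)  # hypothetical "real" data drawn from N(4, 1)
g_params = np.array([1.0, 0.0])          # generator starts out producing N(0, 1)
d_params = np.array([0.1, 0.0])

for step in range(500):
    g_params, d_params = train_step(g_params, d_params, x_real, rng)
print("generator (a, b):", g_params.round(2))
```

The generator's offset `b` should move from 0 toward the real mean of 4, though with players this simple it may oscillate around that value rather than settle, a small-scale view of why GAN training is delicate.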

2. Generating New Content:

Once trained, generative AI can autonomously generate new content by sampling from the learned patterns. This involves providing an initial input to the AI, which then generates output based on the learned correlations.

Example: Generating Images with a Trained GAN

```python
# Generate random noise as input for the generator
noise = tf.random.normal([1, 100])

# Use the generator to produce an image from the noise
generated_image = generator(noise, training=False)
```

Explanation:

- The code generates random noise using TensorFlow's `tf.random.normal` function. This noise serves as input for the generator.

- The generator is called with the generated noise as input to produce an image.

- Setting `training=False` runs the generator in inference mode, so any layers that behave differently during training (such as dropout or batch normalization) use their inference behavior. It does not freeze the weights; those change only when an optimizer applies gradients.
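Each call with fresh noise yields a different image. The NumPy sketch below (independent of TensorFlow; `LATENT_DIM = 100` simply mirrors the generator's `input_shape=(100,)`) shows two practical habits around this sampling step: seeding the random generator so a batch of latent vectors can be reproduced, and linearly interpolating between two latent vectors, a common way to inspect a trained generator. The helper names are illustrative. Feeding `batch` to a trained generator would give 16 images; feeding the rows of `path` would give a gradual morph between two images.

```python
import numpy as np

LATENT_DIM = 100  # mirrors the generator's input_shape=(100,)

def sample_noise(batch_size, seed=None):
    """Draw standard-normal latent vectors; the same seed gives the same batch."""
    rng = np.random.default_rng(seed)
    return rng.standard_normal((batch_size, LATENT_DIM)).astype("float32")

def interpolate(z_start, z_end, steps):
    """Evenly spaced points on the straight line from z_start to z_end."""
    alphas = np.linspace(0.0, 1.0, steps)[:, None]  # shape (steps, 1) for broadcasting
    return (1.0 - alphas) * z_start + alphas * z_end

batch = sample_noise(16, seed=42)
z0, z1 = sample_noise(2, seed=7)
path = interpolate(z0, z1, steps=8)
print(batch.shape, path.shape)  # → (16, 100) (8, 100)
```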

3. Refining the Output:

The generated output may undergo iterative refinement to improve its quality. This process may involve fine-tuning or optimization techniques to enhance the generated content further.

Example: Refining Generated Images

```python
# Evaluate the discriminator's classification of the generated image
decision = discriminator(generated_image)

# Generator loss: binary cross-entropy between the discriminator's output
# and the "real" label (1), i.e. how far the generator is from fooling it
gen_loss = tf.keras.losses.BinaryCrossentropy()(tf.ones_like(decision), decision)
```

Explanation:

- The code evaluates the discriminator’s classification of the generated image by passing it through the discriminator model.

- The decision variable contains the discriminator’s output, which is a probability indicating whether the generated image is real or fake.

- The code calculates the generator loss using binary cross-entropy. It compares the discriminator's classification of the generated image to the target label 1 ("real"), because the generator's goal is to have its images classified as real. The lower this loss, the more successfully the generator is fooling the discriminator; minimizing it with respect to the generator's weights is how later outputs improve.
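To see what `BinaryCrossentropy` computes here, the NumPy sketch below reproduces it by hand and adds the matching discriminator loss, which labels real images 1 and generated images 0. Keras also clips probabilities away from 0 and 1; the sketch mimics that with an assumed `eps=1e-7`. The probabilities are made-up values standing in for discriminator outputs.

```python
import numpy as np

def binary_cross_entropy(y_true, y_pred, eps=1e-7):
    """Mean BCE over the batch; eps guards against log(0)."""
    y_pred = np.clip(y_pred, eps, 1.0 - eps)
    return -np.mean(y_true * np.log(y_pred) + (1.0 - y_true) * np.log(1.0 - y_pred))

def generator_loss(fake_output):
    # The generator wants the discriminator to output 1 ("real") for its images
    return binary_cross_entropy(np.ones_like(fake_output), fake_output)

def discriminator_loss(real_output, fake_output):
    # The discriminator wants 1 for real images and 0 for generated ones
    return (binary_cross_entropy(np.ones_like(real_output), real_output)
            + binary_cross_entropy(np.zeros_like(fake_output), fake_output))

# Hypothetical discriminator outputs for a batch of four generated images
fooled = np.array([0.9, 0.8, 0.95, 0.85])  # discriminator mostly says "real"
caught = np.array([0.1, 0.2, 0.05, 0.15])  # discriminator mostly says "fake"
print(generator_loss(fooled), generator_loss(caught))
```

The first value is much smaller than the second: the generator is rewarded exactly when the discriminator is fooled. An output of 0.5 (a discriminator that cannot tell) gives a generator loss of log 2 ≈ 0.693.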

Famous Generative AIs:

1. DALL-E:

Overview: DALL-E, developed by OpenAI, is a state-of-the-art generative AI model capable of generating images from textual descriptions. It builds upon the GPT-3 architecture and leverages techniques from both computer vision and natural language processing domains.

Functionality: DALL-E takes textual input describing an image concept and generates corresponding images. It can understand complex prompts and generate high-quality images with remarkable detail and fidelity.

Example: For example, given a textual prompt like “a two-story pink house shaped like a shoe,” DALL-E can produce an image that matches this description, demonstrating its ability to understand and translate abstract concepts into visual representations.

2. ChatGPT:

Overview: ChatGPT, also developed by OpenAI, is an advanced conversational AI model based on the GPT (Generative Pre-trained Transformer) architecture. It is trained on vast amounts of text data and excels at generating human-like responses in natural language conversations.

Functionality: ChatGPT can engage in meaningful and coherent conversations on a wide range of topics. It can understand context, maintain dialogue coherence, and generate responses that are contextually relevant and linguistically fluent.

Example: In a conversation with ChatGPT, users can ask questions, seek advice, or engage in casual chat, and the model will respond appropriately, demonstrating its conversational prowess and ability to generate text that resembles human speech.

3. AI Dungeon:

Overview: AI Dungeon is an interactive text-based adventure game powered by large language models (originally OpenAI's GPT-2, later GPT-3 among others), in which players can explore open-ended possibilities in a dynamically generated world.

Functionality: The underlying language model generates text-based narratives in real time based on user input and interactions. It creates immersive and personalized storytelling experiences where players can shape the narrative direction through their actions and choices.

Example: Players can enter any scenario or storyline they imagine, and the model will generate a unique and interactive narrative in response to their inputs. This allows for open-ended creativity and exploration within the game world, making each playthrough a unique storytelling adventure.

Generative AI in art, music, literature and design

1. Generative AI in Artwork:

Generative AI revolutionizes the creation of visual art by autonomously generating paintings, illustrations, and digital art pieces. Artists can leverage generative AI to explore new styles, generate abstract compositions, or even collaborate with the AI as a creative partner.

Use Case: Style Transfer

One popular use case is style transfer, where generative AI algorithms apply the style of one image to the content of another. Let's look at an implementation of neural style transfer using Python with TensorFlow and Keras:

```python
import tensorflow as tf
import matplotlib.pyplot as plt

# VGG19 layers used for style (via Gram matrices) and content (raw activations)
STYLE_LAYERS = ['block1_conv1', 'block2_conv1', 'block3_conv1',
                'block4_conv1', 'block5_conv1']
CONTENT_LAYERS = ['block4_conv2']

def load_img(path_to_img, max_dim=512):
    """Load an image as float32 in [0, 1], longest side scaled to max_dim, with a batch axis."""
    img = tf.io.read_file(path_to_img)
    img = tf.image.decode_image(img, channels=3, expand_animations=False)
    img = tf.image.convert_image_dtype(img, tf.float32)  # uint8 -> [0, 1]
    shape = tf.cast(tf.shape(img)[:-1], tf.float32)
    new_shape = tf.cast(shape * (max_dim / tf.reduce_max(shape)), tf.int32)
    img = tf.image.resize(img, new_shape)  # keeps the aspect ratio
    return img[tf.newaxis, :]

def build_extractor():
    """Frozen VGG19 returning the activations of the style and content layers."""
    vgg = tf.keras.applications.VGG19(include_top=False, weights='imagenet')
    vgg.trainable = False
    outputs = [vgg.get_layer(name).output for name in STYLE_LAYERS + CONTENT_LAYERS]
    return tf.keras.Model(vgg.input, outputs)

def gram_matrix(input_tensor):
    # Channel-to-channel correlations, averaged over all spatial positions
    result = tf.linalg.einsum('bijc,bijd->bcd', input_tensor, input_tensor)
    input_shape = tf.shape(input_tensor)
    num_locations = tf.cast(input_shape[1] * input_shape[2], tf.float32)
    return result / num_locations

def extract(extractor, image):
    """Return (style Gram matrices, content features) for an image in [0, 1]."""
    # VGG19 expects 0-255, BGR, mean-centred input; preprocess_input does that conversion
    outputs = extractor(tf.keras.applications.vgg19.preprocess_input(image * 255.0))
    style = [gram_matrix(o) for o in outputs[:len(STYLE_LAYERS)]]
    content = outputs[len(STYLE_LAYERS):]
    return style, content

def compute_loss(extractor, image, style_targets, content_targets,
                 style_weight, content_weight, tv_weight):
    style, content = extract(extractor, image)
    style_score = tf.add_n([tf.reduce_mean(tf.square(s - t))
                            for s, t in zip(style, style_targets)]) / len(STYLE_LAYERS)
    content_score = tf.add_n([tf.reduce_mean(tf.square(c - t))
                              for c, t in zip(content, content_targets)]) / len(CONTENT_LAYERS)
    tv_score = tf.reduce_sum(tf.image.total_variation(image))  # penalises pixel noise
    loss = style_weight * style_score + content_weight * content_score + tv_weight * tv_score
    return loss, style_score, content_score

def run_style_transfer(content_path, style_path, num_iterations=1000,
                       content_weight=1e3, style_weight=1e-2, tv_weight=30.0):
    content_image = load_img(content_path)
    style_image = load_img(style_path)
    extractor = build_extractor()
    style_targets, _ = extract(extractor, style_image)
    _, content_targets = extract(extractor, content_image)

    # Optimise the pixels directly, starting from the content image
    image = tf.Variable(content_image)
    opt = tf.keras.optimizers.Adam(learning_rate=0.02, beta_1=0.99, epsilon=1e-1)

    for i in range(num_iterations):
        with tf.GradientTape() as tape:
            loss, style_score, content_score = compute_loss(
                extractor, image, style_targets, content_targets,
                style_weight, content_weight, tv_weight)
        grads = tape.gradient(loss, image)
        opt.apply_gradients([(grads, image)])
        image.assign(tf.clip_by_value(image, 0.0, 1.0))  # keep pixels in [0, 1]

        if i % 100 == 0:
            print("Iteration:", i)
            print('Total loss:', loss.numpy())
            print('Style loss:', style_score.numpy())
            print('Content loss:', content_score.numpy())

    return image[0].numpy()  # (height, width, 3) array with values in [0, 1]

content_path = 'content.jpg'
style_path = 'style.jpg'
result = run_style_transfer(content_path, style_path)
plt.imshow(result)
plt.axis('off')
plt.show()
```

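This code snippet demonstrates neural style transfer with a pretrained VGG19 network in TensorFlow. Starting from the content image, it repeatedly adjusts the pixels so that the image's deep features stay close to those of the content image while its Gram matrices (feature correlations) approach those of the style image. In this way, artists can merge the style of one image with the content of another, producing captivating and unique artworks.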
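The style term rests on the Gram matrix. The small NumPy check below, using a random array as a stand-in for a VGG feature map (batch × height × width × channels), shows that the `einsum` in `gram_matrix` is the same as flattening the spatial positions and multiplying the feature matrix by its own transpose, then dividing by the number of positions. The two helper names are illustrative.

```python
import numpy as np

def gram_einsum(features):
    """Same formula as gram_matrix above, written for NumPy arrays."""
    _, h, w, _ = features.shape
    return np.einsum('bijc,bijd->bcd', features, features) / (h * w)

def gram_matmul(features):
    """Equivalent form: reshape to (batch, positions, channels) and compute F^T F."""
    b, h, w, c = features.shape
    flat = features.reshape(b, h * w, c)
    return np.matmul(flat.transpose(0, 2, 1), flat) / (h * w)

rng = np.random.default_rng(0)
features = rng.standard_normal((1, 8, 8, 4))  # hypothetical feature map with 4 channels
g = gram_einsum(features)
print(g.shape)  # → (1, 4, 4)
```

Because the spatial indices are summed out, the Gram matrix records which features occur together but not where they occur, which is why matching it transfers texture and style rather than layout.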

2. Generative AI in Music:

Generative AI transforms music composition by autonomously generating melodies, harmonies, and rhythms. Musicians can utilize generative AI to explore new musical styles, create dynamic compositions, and even assist in the production process.

Use Case: Music Generation

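One powerful application is music generation, where generative AI algorithms create original pieces of music. Below is a simple example of music generation using Python with Magenta's pre-trained Performance RNN model (Magenta is built on TensorFlow, and its note-sequence utilities now live in the separate `note_seq` package):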

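```python
import note_seq
from note_seq.protobuf import generator_pb2, music_pb2
from magenta.models.performance_rnn import performance_sequence_generator
from magenta.models.shared import sequence_generator_bundle

# Load the pre-trained model (the .mag bundle file must be downloaded beforehand)
bundle = sequence_generator_bundle.read_bundle_file('performance_with_dynamics.mag')
generator_map = performance_sequence_generator.get_generator_map()
generator = generator_map['performance_with_dynamics'](checkpoint=None, bundle=bundle)
generator.initialize()

# Build a short primer: a single middle C (MIDI pitch 60) lasting half a second
primer = music_pb2.NoteSequence()
primer.notes.add(pitch=60, start_time=0.0, end_time=0.5, velocity=80)
primer.total_time = 0.5

# Ask the model to continue the primer up to the 30-second mark
generator_options = generator_pb2.GeneratorOptions()
generator_options.args['temperature'].float_value = 1.0
generator_options.generate_sections.add(start_time=primer.total_time, end_time=30.0)

# Generate a music sequence
gen_sequence = generator.generate(primer, generator_options)

# Save the generated sequence to MIDI
note_seq.sequence_proto_to_midi_file(gen_sequence, 'generated_music.mid')
```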

This code snippet demonstrates how to generate music using a pre-trained performance model from Magenta. Musicians can use generative AI to create entire musical compositions or generate ideas to inspire their own compositions.
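Performance RNN is a recurrent neural network, but the core idea of learning which notes tend to follow which can be shown with something much smaller. The sketch below swaps in a first-order Markov chain (a different, far simpler technique than Magenta's) trained on a hypothetical melody written as MIDI pitch numbers: it records every observed transition and then samples a new melody by walking those transitions.

```python
import random
from collections import defaultdict

def train_markov(melody):
    """Record, for each pitch, every pitch that followed it in the training melody."""
    transitions = defaultdict(list)
    for current, nxt in zip(melody, melody[1:]):
        transitions[current].append(nxt)
    return transitions

def generate_melody(transitions, start, length, seed=None):
    """Walk the chain; repeated entries make frequent transitions more likely."""
    rng = random.Random(seed)
    melody = [start]
    while len(melody) < length:
        options = transitions.get(melody[-1])
        if not options:  # dead end: fall back to the opening pitch
            options = [start]
        melody.append(rng.choice(options))
    return melody

# Hypothetical training melody (MIDI pitches; 60 = middle C)
training = [60, 62, 64, 60, 64, 65, 67, 67, 65, 64, 62, 60, 62, 64, 60]
chain = train_markov(training)
new_melody = generate_melody(chain, start=60, length=16, seed=1)
print(new_melody)
```

Every step of the output is a transition that occurred in the training melody, yet the sequence as a whole is new; neural models such as Performance RNN generalize this by conditioning on much longer context and on timing and dynamics.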

3. Generative AI in Literature:

Generative AI revolutionizes literature by autonomously generating stories, poems, and prose. Writers can leverage it to explore new narrative structures, develop characters, and even overcome writer’s block.

Use Case: Text Generation

A prominent application is text generation, where generative AI algorithms produce original written content. Let's look at a simple implementation of text generation using Python with the Hugging Face Transformers library and the GPT-2 model (this example uses the PyTorch backend):

```python
from transformers import GPT2LMHeadModel, GPT2Tokenizer

tokenizer = GPT2Tokenizer.from_pretrained("gpt2")
model = GPT2LMHeadModel.from_pretrained("gpt2")

prompt_text = "Once upon a time, in a land far, far away"
inputs = tokenizer.encode(prompt_text, return_tensors="pt")  # PyTorch tensor of token IDs

# Sample instead of greedy decoding to get less repetitive text
output = model.generate(
    inputs,
    max_length=200,
    do_sample=True,
    top_k=50,
    num_return_sequences=1,
    pad_token_id=tokenizer.eos_token_id,  # GPT-2 has no dedicated padding token
)

generated_text = tokenizer.decode(output[0], skip_special_tokens=True)
print(generated_text)
```

This code snippet demonstrates how to generate text using the GPT-2 model from the Hugging Face Transformers library. Writers can use generative AI to generate story ideas, explore different writing styles, or even create entire chapters of a novel.
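`model.generate` picks one token at a time. By default it takes the single most likely token (greedy decoding); sampling with a temperature and a top-k cutoff (the `temperature` and `top_k` arguments of `generate`) is a common way to get more varied stories. The NumPy sketch below mimics that choice for a single position over a made-up five-token vocabulary; it is a simplified illustration of top-k sampling with temperature, not the Transformers implementation.

```python
import numpy as np

def softmax(x):
    x = x - np.max(x)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum()

def sample_next_token(logits, temperature=1.0, top_k=None, rng=None):
    """Pick a token index from raw scores using temperature scaling and an optional top-k cutoff."""
    if rng is None:
        rng = np.random.default_rng()
    scaled = np.asarray(logits, dtype=float) / temperature  # <1 sharpens, >1 flattens
    if top_k is not None:
        # Keep only the k highest-scoring tokens; the rest get zero probability
        cutoff = np.sort(scaled)[-top_k]
        scaled = np.where(scaled >= cutoff, scaled, -np.inf)
    probs = softmax(scaled)
    return int(rng.choice(len(probs), p=probs)), probs

vocab = ["castle", "dragon", "forest", "the", "banana"]  # hypothetical tokens
logits = [2.0, 1.5, 1.0, 0.5, -1.0]                      # hypothetical model scores
rng = np.random.default_rng(0)
idx, probs = sample_next_token(logits, temperature=0.7, top_k=3, rng=rng)
print(vocab[idx], probs.round(3))
```

With `top_k=3`, "the" and "banana" can never be chosen; lowering the temperature concentrates probability on "castle", and `top_k=1` reduces to greedy decoding.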

4. Generative AI in Design:

Generative AI redefines design by autonomously generating visual concepts, layouts, and structures. Designers can leverage generative AI to explore new aesthetics, optimize designs, and streamline the creative process.

Use Case: Image Generation

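An impactful application is image generation, where generative AI algorithms create visual content from scratch. Here's a simple example of image generation with DALL-E. Because DALL-E's model weights are not publicly released, the example calls it through OpenAI's Images API using the official `openai` Python package (an API key is required):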

```python
import base64
import io

from openai import OpenAI
from PIL import Image

client = OpenAI()  # reads the API key from the OPENAI_API_KEY environment variable

# Generate an image from a text prompt
response = client.images.generate(
    model="dall-e-3",
    prompt="a two-story pink house shaped like a shoe",
    size="1024x1024",
    n=1,
    response_format="b64_json",  # return the image bytes instead of a URL
)

# Convert the base64 payload to a PIL image
image_bytes = base64.b64decode(response.data[0].b64_json)
generated_image = Image.open(io.BytesIO(image_bytes))

# Display the generated image
generated_image.show()
```

This code snippet demonstrates how to generate images using the DALL-E model. Designers can use generative AI to create visual concepts, generate design elements, or even generate entire layouts for websites or products.

Conclusion

The boundless creativity of generative AI transcends traditional boundaries, opening new avenues of exploration and innovation across diverse domains. From generating artwork that challenges artistic norms to assisting scientists in solving complex problems, generative AI continues to push the limits of what is possible.

As we embrace this technology with open minds and innovative spirits, we unlock endless possibilities for human creativity and ingenuity. Whether it’s in the realm of art, music, literature, or science, generative AI serves as a catalyst for inspiration, collaboration, and discovery.

Endnote

Thank you for taking the time to explore the boundless creativity of generative AI with us. We value your feedback and would love to hear your thoughts on this topic. Please feel free to leave your comments and share your insights below.

If you enjoyed this article and would like to stay updated on the latest developments in AI and creativity, consider subscribing to our newsletter. By subscribing, you’ll receive regular updates, exclusive content, and insights delivered straight to your inbox.

