In this post, I am going to give a summary of nine key papers from the distributional reinforcement learning (DRL) area.

Paper 001: A Distributional Perspective on Reinforcement Learning

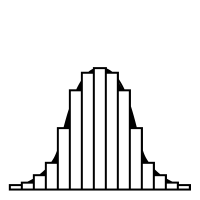

This is the seminal paper in this area. Its key argument is that the value distribution matters in reinforcement learning and leads to better results. Conventional RL uses the expected value of the return at each state, while distributional RL takes the whole distribution of the return into consideration. The authors define the value distribution as the distribution of the random return received by the reinforcement learning agent. A tutorial blog post about the paper is available here.
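
To make the difference between an expected value and a value distribution concrete, here is a minimal sketch. The two actions and their return distributions are made up for illustration and are not taken from the paper.

```python
import numpy as np

# Hypothetical return distributions for two actions in the same state,
# each written as (possible returns, their probabilities).
safe_values, safe_probs = np.array([1.0]), np.array([1.0])
risky_values, risky_probs = np.array([0.0, 10.0]), np.array([0.9, 0.1])

def mean_and_std(values, probs):
    """Mean and standard deviation of a discrete return distribution."""
    mean = np.sum(values * probs)
    var = np.sum(probs * (values - mean) ** 2)
    return mean, np.sqrt(var)

safe_mean, safe_std = mean_and_std(safe_values, safe_probs)
risky_mean, risky_std = mean_and_std(risky_values, risky_probs)

# Both actions have the same expected return, so a purely expectation-based
# agent cannot tell them apart. The spread of the distributions differs a lot.
print("safe :", safe_mean, safe_std)
print("risky:", risky_mean, risky_std)
```

An agent that only stores the mean sees two identical actions here, while an agent that keeps the distribution can still distinguish them.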

They state that there is an established body of literature studying the value distribution, but that it focuses on implementing risk-aware behaviour. Can we use the information available in the value distribution to detect potential risk and avoid it? If yes, how? Which papers deal with risk-aware behaviour using the return distribution?

They claim to provide theoretical results for both policy evaluation and control, supported by empirical evidence. The authors admit that there is some instability in the control setting. Why? It is claimed that an approximate value distribution is learned in the process. What is an approximate value distribution? A few unfamiliar terms, such as approximate reinforcement learning and anecdotal evidence, are used. What do they mean?

Paper 002: Distributional Reinforcement Learning With Quantile Regression

Here the authors state that the objective is to learn the value distribution instead of the value function. The abstract uses the term algorithmic result. This paper fixes a problem left open by the seminal paper (Paper 001): there, the distributional RL algorithm could not use the Wasserstein metric with stochastic gradient descent. In this paper, they use quantile regression to stochastically adjust the distributions.
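
To see what "stochastically adjusting" a distribution with quantile regression can look like, here is a minimal sketch. It uses the plain quantile regression (pinball) loss on samples from a fixed Gaussian, with no neural network and no temporal-difference targets, so it is only an illustration of the loss and not the paper's algorithm. The learning rate and sample count are arbitrary choices.

```python
import numpy as np

def pinball_loss(theta, samples, tau):
    """Quantile regression loss of the estimate theta at quantile level tau."""
    u = samples - theta
    return np.mean(np.where(u >= 0, tau * u, (tau - 1) * u))

rng = np.random.default_rng(0)
returns = rng.normal(loc=0.0, scale=1.0, size=50_000)  # hypothetical sampled returns

taus = np.array([0.1, 0.5, 0.9])  # quantile levels to estimate
thetas = np.zeros_like(taus)      # one estimate per quantile level
lr = 0.01                         # assumed constant step size

for g in returns:
    # Stochastic subgradient step on the pinball loss:
    # move up by lr * tau if the sample is above the estimate,
    # move down by lr * (1 - tau) if it is below.
    thetas += lr * (taus - (g < thetas))

print(thetas)  # should sit near the 10%, 50% and 90% quantiles of N(0, 1)
```

Each estimate only needs one sample at a time, which is what makes this kind of update compatible with stochastic gradient descent.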

Paper 003: Implicit Quantile Networks For Distributional Reinforcement Learning

I am not exactly sure how this paper differs from Paper 002. It mentions applying quantile regression to approximate the full quantile function of the state-action return distribution. Do the earlier papers use the distribution to model the state return or the state-action return? Here the implicitly defined distribution is used to study the effects of risk-sensitive policies in Atari games.
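
One way to picture how a full quantile function allows risk-sensitive behaviour is the sketch below. The quantile function is a hand-written stand-in for a learned network, and the CVaR-style restriction of the sampled quantile levels is just one illustrative choice of risk measure.

```python
import numpy as np

rng = np.random.default_rng(0)

def quantile_fn(tau):
    # Hypothetical stand-in for a learned quantile function:
    # returns are uniform on [-1, 3], so F^{-1}(tau) = -1 + 4 * tau.
    return -1.0 + 4.0 * tau

taus = rng.uniform(0.0, 1.0, size=10_000)

# Risk-neutral value: average the quantile function over uniformly sampled levels.
risk_neutral_value = quantile_fn(taus).mean()

# Risk-averse value: only sample levels in [0, eta], i.e. average the worst
# eta-fraction of outcomes (a CVaR-style distortion, assumed here for illustration).
eta = 0.25
risk_averse_value = quantile_fn(eta * taus).mean()

print(risk_neutral_value, risk_averse_value)
```

Changing how the quantile levels are sampled changes the value the agent acts on, without retraining the quantile function itself.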

Paper 004: Dopamine: A Research Framework For Deep Reinforcement Learning

This is a research framework with a code repository that eases the implementation of deep reinforcement learning algorithms. The code is written in Python. The authors claim to provide compact and reliable implementations of some state-of-the-art deep RL agents, although the list of algorithms is not exhaustive. They also claim to provide a taxonomy of the different research objectives in deep RL research.

Paper 005: Information-Directed Exploration For Deep Reinforcement Learning

This paper primarily deals with the exploration problem in reinforcement learning. The authors say that the variability of the return depends on the current state and action and is therefore heteroscedastic. Because of this, classical exploration strategies such as upper confidence bound and Thompson sampling fail even in a bandit setting. If I give my arm models heteroscedastic properties, will my epsilon-greedy algorithm fail? They propose Information-Directed Sampling for exploration in reinforcement learning and claim that the resulting exploration strategy can deal with both parametric uncertainty and heteroscedastic observation noise. What do they mean by parametric uncertainty?
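
As a reminder of what heteroscedastic means in a bandit setting, here is a minimal sketch of a two-armed bandit whose noise level depends on the arm. The means and standard deviations are invented for illustration, and the sketch does not implement Information-Directed Sampling.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical two-armed bandit: similar means, very different noise levels.
arm_means = np.array([1.0, 1.1])
arm_stds = np.array([0.1, 3.0])  # the noise depends on the arm: heteroscedastic

def pull(arm, n):
    """Draw n noisy rewards from the given arm."""
    return rng.normal(arm_means[arm], arm_stds[arm], size=n)

samples = [pull(arm, 10_000) for arm in range(2)]
empirical_stds = np.array([s.std() for s in samples])

print(empirical_stds)  # arm 1 is far noisier than arm 0
```

A strategy that assumes the same noise level for every arm would treat a reward from arm 1 as being as informative as one from arm 0, which is not the case here.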

Paper 006: GAN Q-learning

The abstract says distributional reinforcement learning has had empirical success in complex MDPs in the setting of nonlinear function approximation. In this paper they propose a new type of DRL called GAN Q-learning. Its performance is analyzed in a tabular environment and in OpenAI Gym.

Paper 007: QUOTA: The Quantile Option Architecture for Reinforcement Learning

In QUOTA, decision making is based on quantiles of the value distribution and not only on mean values. QUOTA makes use of both the optimism and the pessimism of the value distribution.
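
The sketch below shows how acting on quantiles can differ from acting on the mean. The quantile estimates are made up, and the code only compares three greedy choices; it does not reproduce the option architecture from the paper.

```python
import numpy as np

# Hypothetical quantile estimates of the return.
# Rows are actions, columns are quantile levels 0.1, 0.3, 0.5, 0.7, 0.9.
quantiles = np.array([
    [0.8, 0.9, 1.0, 1.1, 1.2],   # action 0: narrow distribution
    [-2.0, 0.0, 1.2, 2.0, 4.0],  # action 1: wide distribution
])

# With equally spaced quantile levels, the average of the quantiles
# approximates the mean return of each action.
mean_greedy = int(quantiles.mean(axis=1).argmax())

# Optimistic choice: greedy with respect to a high quantile.
optimistic = int(quantiles[:, -1].argmax())

# Pessimistic choice: greedy with respect to a low quantile.
pessimistic = int(quantiles[:, 0].argmax())

print(mean_greedy, optimistic, pessimistic)
```

In this toy case the mean-greedy and optimistic choices pick the wide distribution, while the pessimistic choice prefers the narrow one.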

Paper 008: Estimating Risk and Uncertainty in Deep Reinforcement Learning

This paper deals with uncertainties in deep reinforcement learning. They have made use of distributional reinforcement learning and Bayesian inference to deal with uncertainties.

Paper 009: Fully Parameterized Quantile Function for Distributional Reinforcement Learning

The authors state that distributional RL has been successful, but that parameterizing the estimated distributions so as to better approximate the true continuous distribution remains a challenge. Existing algorithms parameterize either the probability side or the return value side of the distribution function. In this paper, the authors propose a fully parameterized quantile function that parameterizes both the quantile fraction axis (i.e., the x-axis) and the value axis (i.e., the y-axis) for distributional RL. They use neural networks to approximate these functions.
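
To picture the two axes, the sketch below represents a return distribution as a step function over a set of quantile fractions and computes the expected return from it. The quantile function, the "learned" fractions, and the midpoint rule are all assumptions made for illustration; in the paper both the fractions and the values come from neural networks.

```python
import numpy as np

def expected_return(fractions, quantile_fn):
    """Approximate the mean return from a step-function quantile representation.

    fractions: increasing array of quantile fractions from 0 to 1 (the x-axis).
    quantile_fn: maps a fraction to a return value (the y-axis).
    """
    midpoints = (fractions[:-1] + fractions[1:]) / 2
    widths = np.diff(fractions)
    return np.sum(widths * quantile_fn(midpoints))

# Hypothetical quantile function: returns uniform on [0, 2], so F^{-1}(tau) = 2 * tau.
def quantile_fn(tau):
    return 2.0 * tau

# Fixed, equally spaced fractions (only the value axis would be learned).
uniform_fractions = np.linspace(0.0, 1.0, 5)

# Stand-in for fractions that a network might propose (both axes adjustable).
learned_fractions = np.array([0.0, 0.1, 0.3, 0.7, 1.0])

uniform_estimate = expected_return(uniform_fractions, quantile_fn)
learned_estimate = expected_return(learned_fractions, quantile_fn)
print(uniform_estimate, learned_estimate)
```

For this simple linear quantile function both sets of fractions recover the true mean of 1.0 up to floating-point error; the choice of fractions starts to matter once the quantile function is curved.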