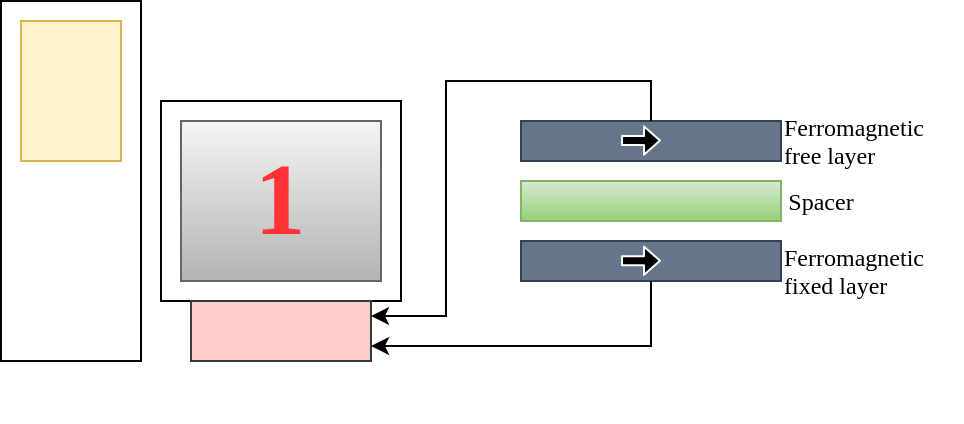

Spin Transfer Torque based Magnetic Memory Devices

Imagine a computer storage device that performs as fast as static RAM (SRAM), offers the cost-effectiveness of dynamic RAM (DRAM), and promises the non-volatility of flash memory, with the added benefits of longevity, denser storage, durability and stability. Let’s discuss whether spin transfer torque based random access memory (STT-RAM) devices can help to realize […]
